AI has been progressing faster than we all suspected. Though it has so far been restricted to software, the first hardware devices centred around AI are arriving on the market.

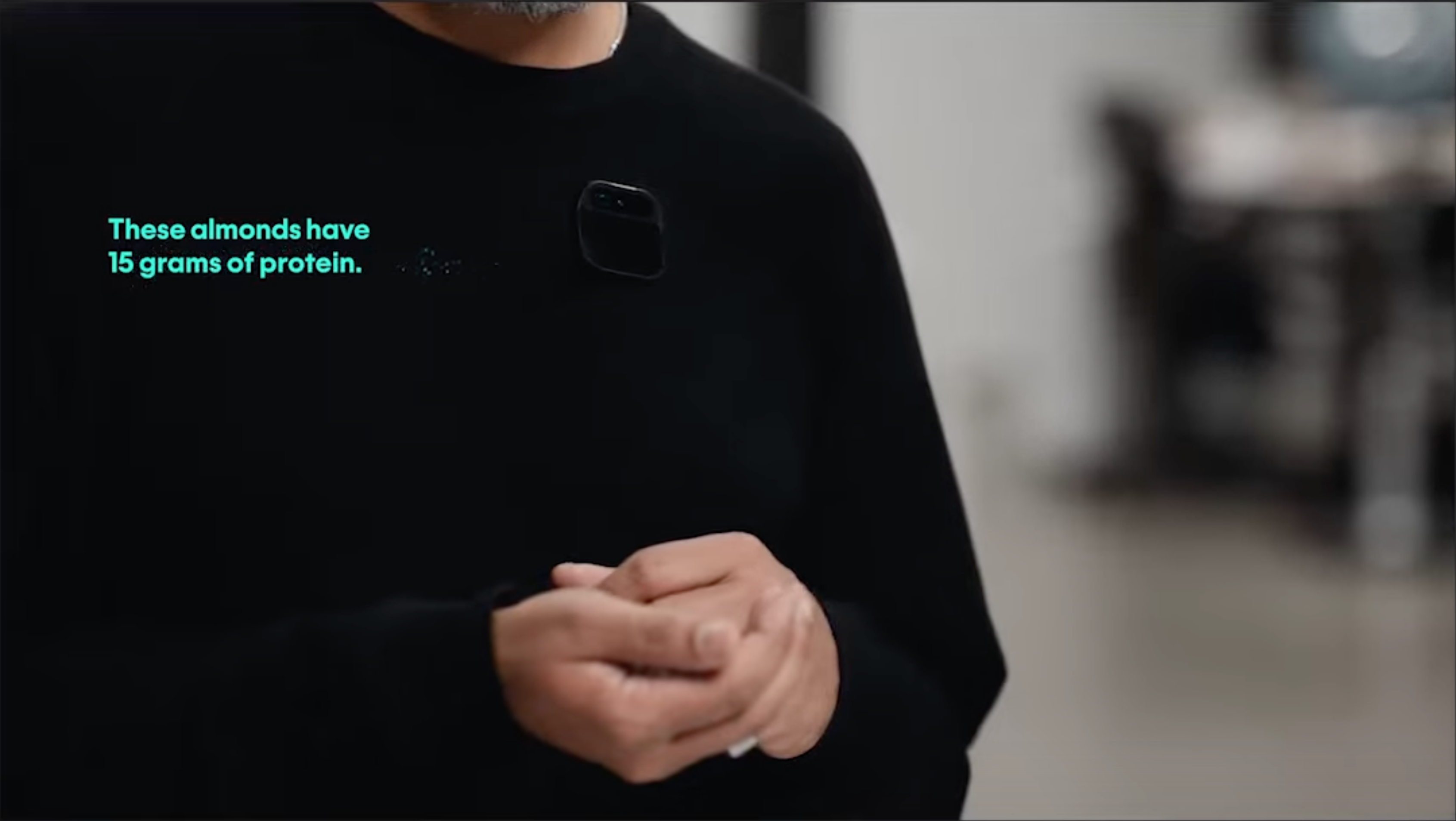

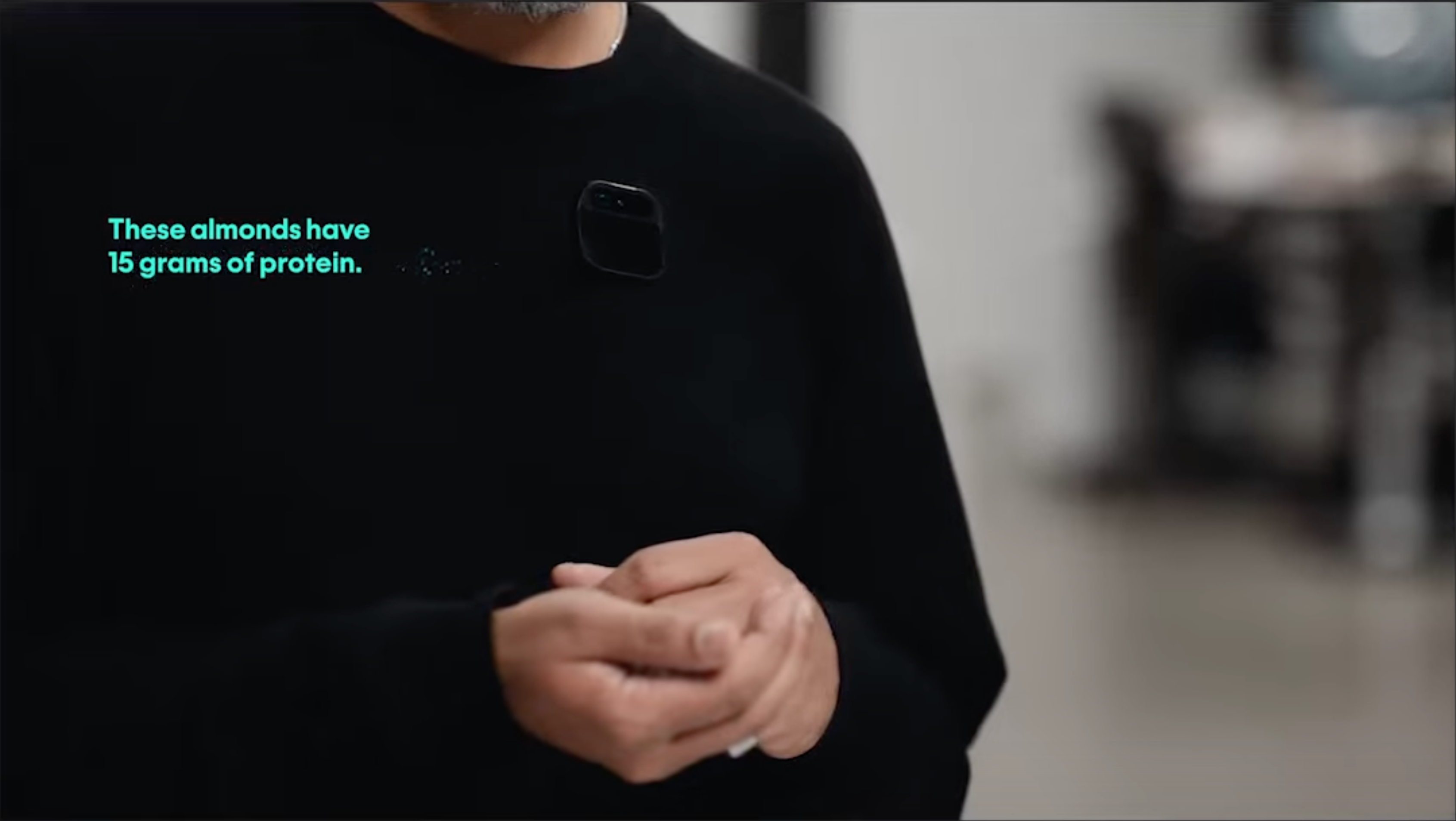

Humane’s AI Pin is one of these devices. Powered by GPT-4, OpenAI's latest large language model, the AI Pin is described by Humane's founder, Imran Chaudhri, as a “seamless, screenless and sensing experience” 1 Chaudhri, Imran. “The Disappearing Computer - and a World Where You Can Take Ai Everywhere.” Imran Chaudhri: The disappearing computer - and a world where you can take AI everywhere | TED Talk, April 2023. https://www.ted.com/talks/imran_chaudhri_the_disappearing_computer_and_a_world_where_you_can_take_ai_everywhere. . The palm-sized device is fitted to the user's clothing at chest level. Equipped with microphones, speakers, a camera and a projector, it is the first generation of conversational AI that accompanies you through every step of your daily life 2 Humane Inc. “Aipin Tech Details.” AiPin Tech Details, 2023. https://hu.ma.ne/aipin/tech-details. . Dreamed up by a team of former designers at Apple, it is their version of the “next big thing” replacing smartphones.

But when new technology like AI is in its early stages of development, we run into a conundrum known as the Collingridge Dilemma, where “it is still possible to influence the direction of its development, but we do not know yet how it will affect society. Yet, when the technology has become societally embedded, we do know its implications, but it is very difficult to influence its development” 3 Kudina, Olya, and Peter-Paul Verbeek. “Ethics from within: Google Glass, the Collingridge Dilemma, and the Mediated Value of Privacy.” Science, Technology, & Human Values 44, no. 2 (2018): 291–314. https://doi.org/10.1177/0162243918793711. .

Sam Altman, the founder of OpenAI, hinted at this dilemma at the WSJ 2023 Conference. While stressing that “it would be a moral failing not to go pursue [AI] for humanity,” he cautioned that “[as] with other technologies, we've got to address the downsides that come along with this.” His approach, he said, was to be “thoughtful about the risks”, “try to measure what the capabilities are”, and “build your own technology in a way that mitigates those risks” 4 Stern, Joanna, Mira Murati, and Sam Altman. “A Conversation with OpenAI’s Sam Altman and Mira Murati.” WSJ, October 20, 2023. https://open.spotify.com/episode/6JE1wksSOkMApOuwqiN9BK?si=126807ed409a427b. .

Humane’s co-founder Imran Chaudhri acknowledged this conundrum more indirectly during the first-ever public demo of the Humane AI Pin, in a TED Talk, when he said, “As AI advances, we will see how it will transform every aspect of our lives in ways that seem unimaginable right now” 5 Chaudhri, Imran. “The Disappearing Computer - and a World Where You Can Take Ai Everywhere.” Imran Chaudhri: The disappearing computer - and a world where you can take AI everywhere | TED Talk, April 2023. https://www.ted.com/talks/imran_chaudhri_the_disappearing_computer_and_a_world_where_you_can_take_ai_everywhere. .

Yet, as ever, actions speak louder than words. Instead of trying to anticipate these changes, Humane seems to place the burden of exploring these unimaginables on the consumer, as part of a sociotechnical experiment. These are unimaginables that, according to van de Poel, “are inherent in social changes induced by technological development” 6 Poel, Ibo van de. “An Ethical Framework for Evaluating Experimental Technology.” Science and Engineering Ethics 22, no. 3 (2015): 667–86. https://doi.org/10.1007/s11948-015-9724-3. .

Taking a page straight from the Google Glass playbook, Humane presented its technology at high-fashion shows in New York and Paris 7 Dugdale, Addy. “Google Glass Hits the Runway at Fashion Week - Fast Company.” Fast Company, September 10, 2012. https://www.fastcompany.com/3001142/google-glass-hits-runway-fashion-week. 8 Humane Inc. “Humane X Coperni.” Humane x Coperni, 2023. https://hu.ma.ne/media/humanexcoperni. . There are other parallels between the 2012 Google Glass and Humane’s 2023 AI Pin that can shed light on some of these unimaginables. Both Google Glass and Humane’s AI Pin are products that made novel technologies available to end users for the first time in a hardware product. Furthermore, Google’s description of Glass as “augment[ing] human perception by providing an additional layer of information that blurs the boundary between the public and the private in new ways” 9 Kudina, Olya, and Peter-Paul Verbeek. “Ethics from within: Google Glass, the Collingridge Dilemma, and the Mediated Value of Privacy.” Science, Technology, & Human Values 44, no. 2 (2018): 291–314. https://doi.org/10.1177/0162243918793711. can be directly transposed to Humane’s AI Pin.

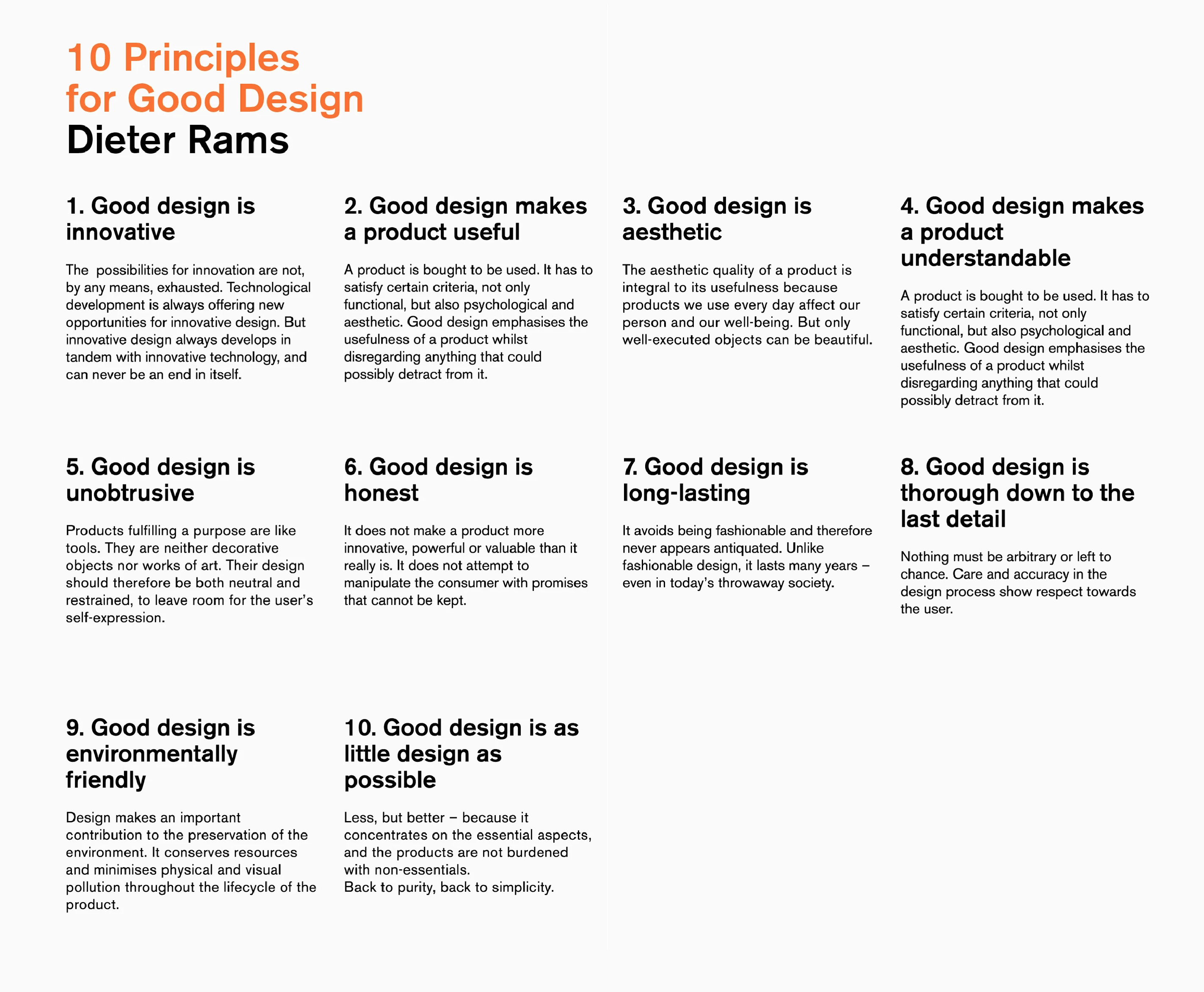

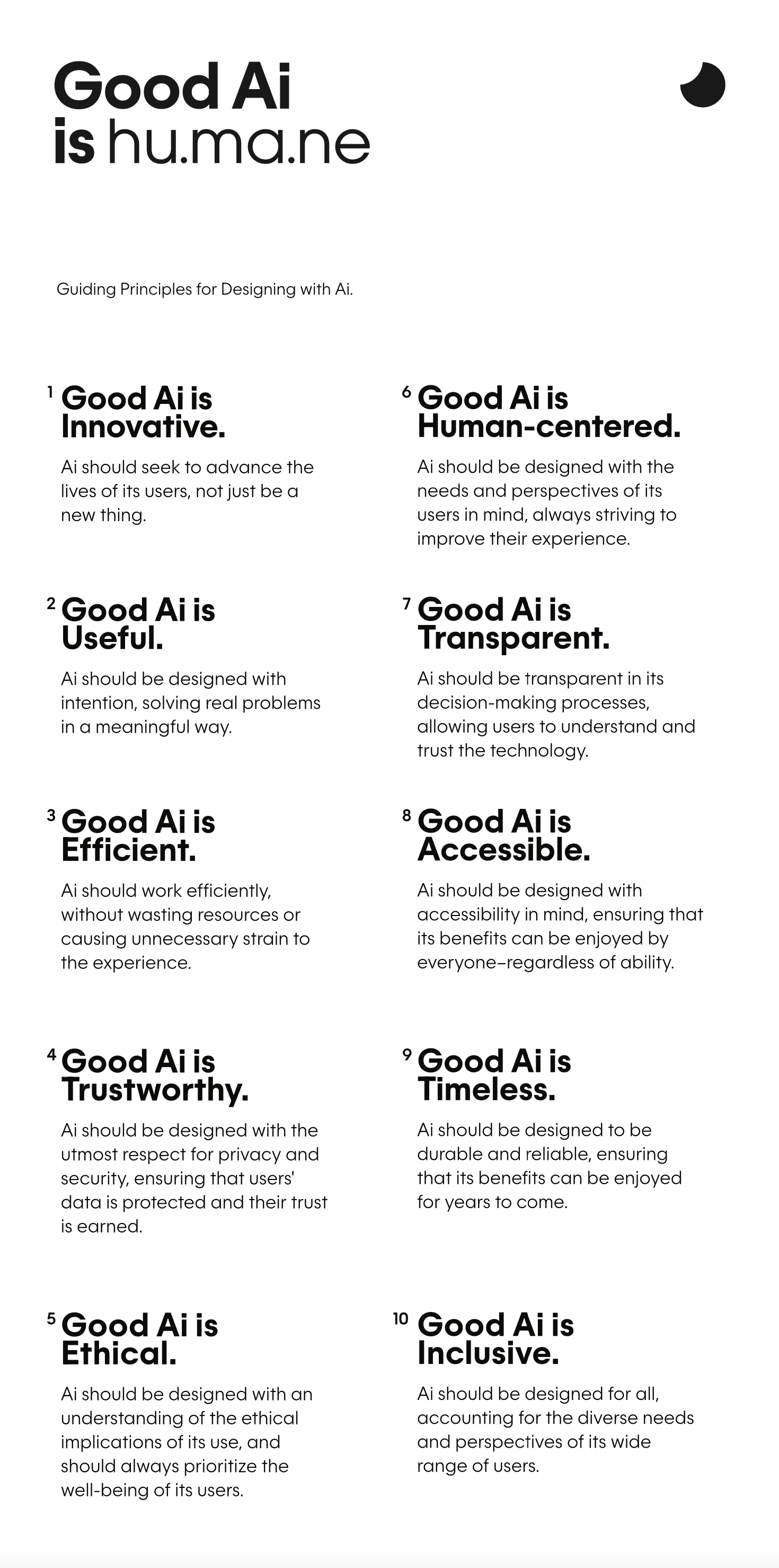

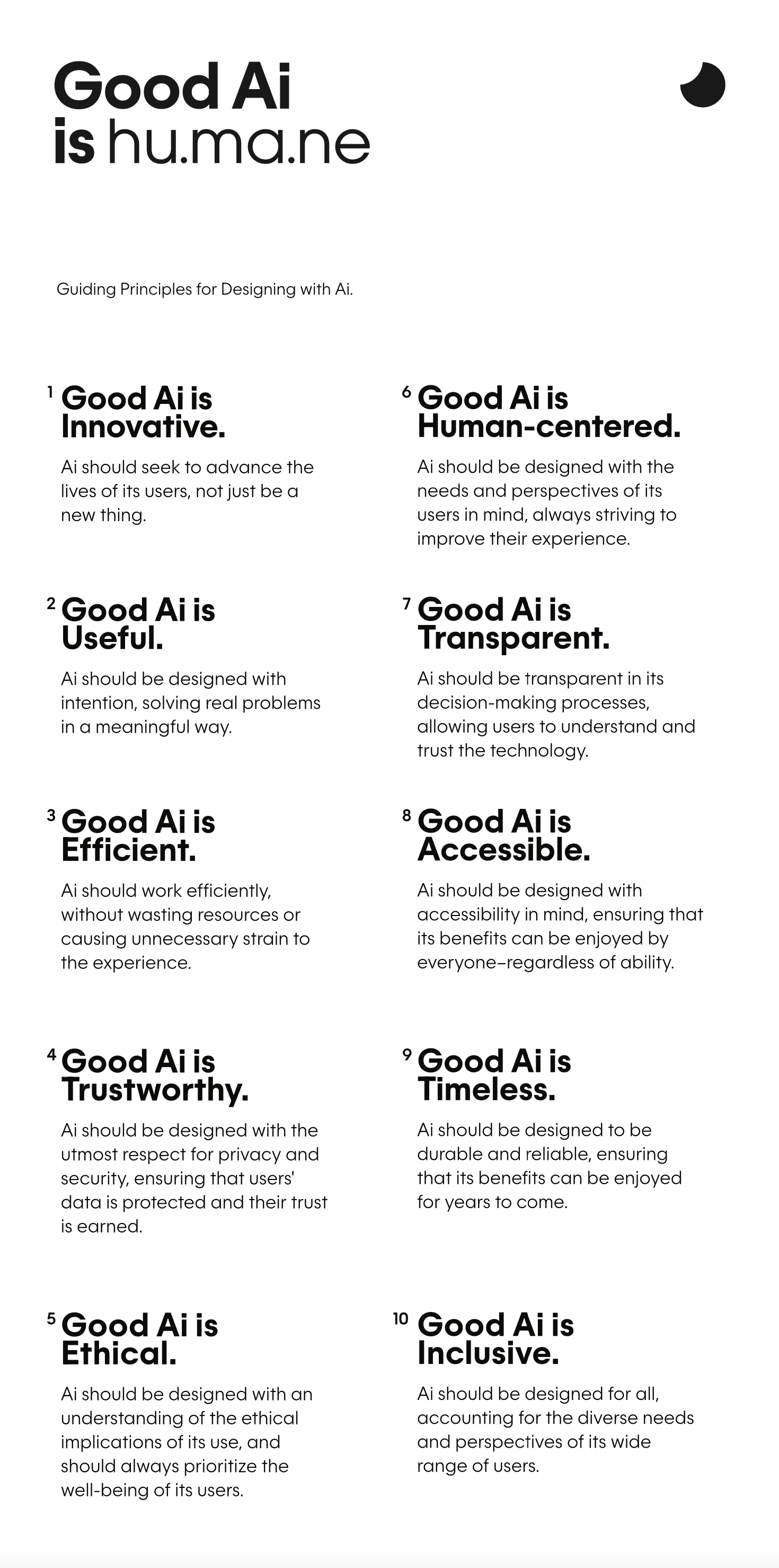

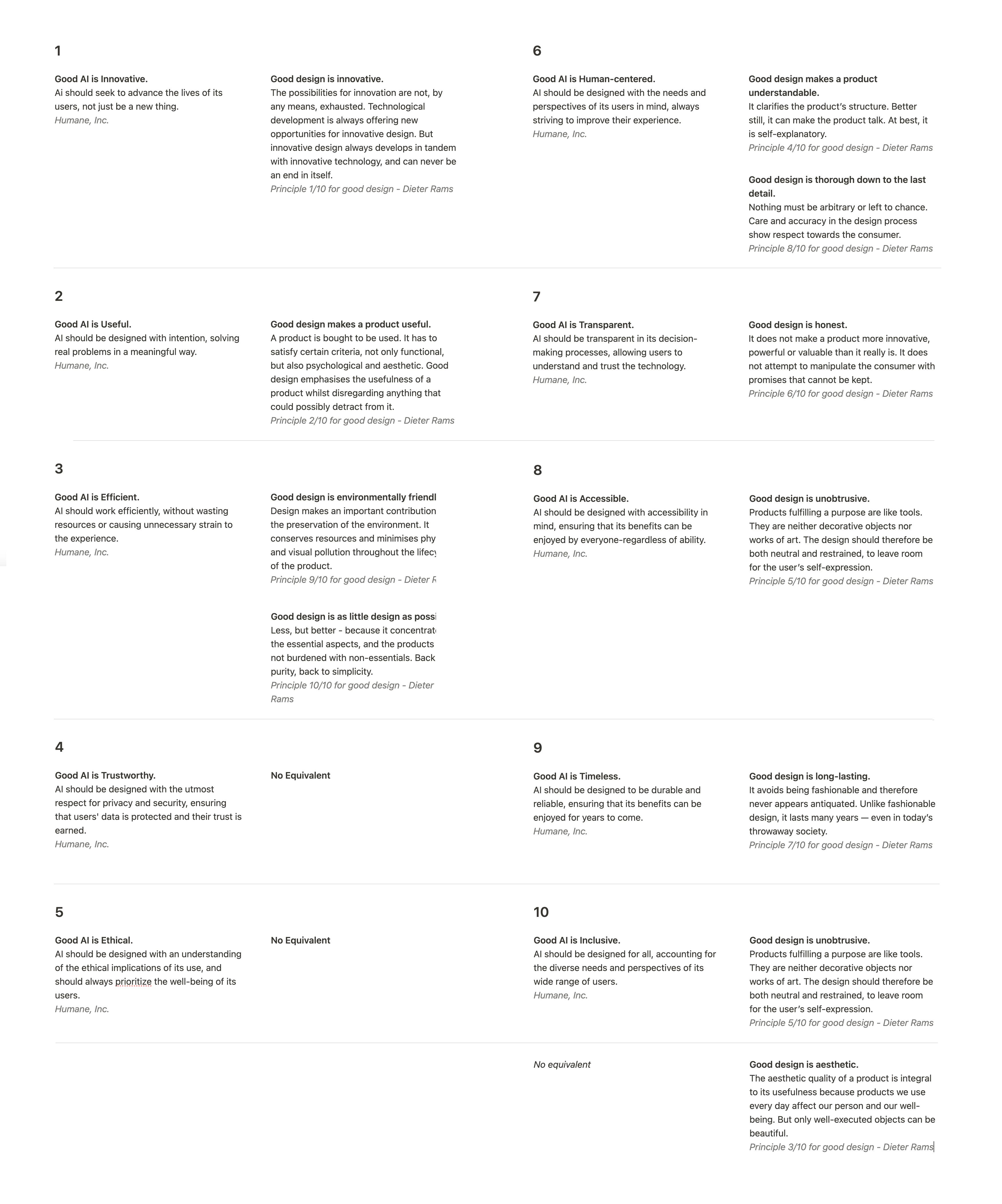

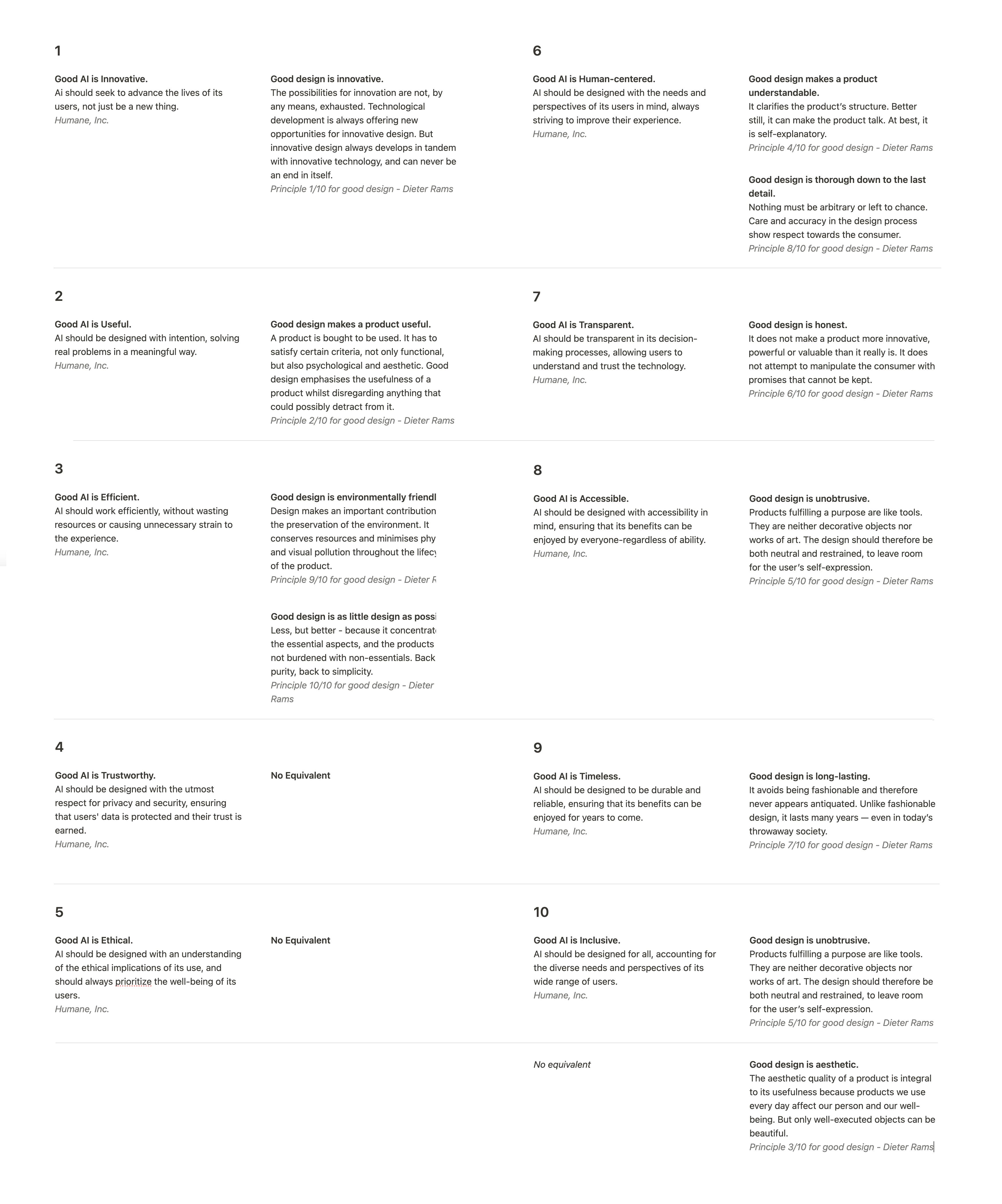

However, Google Glass ultimately failed as a consumer product. To break from the Google Glass trajectory before the AI Pin reaches the same stage, Humane has published Ten Principles for Good AI as the strategy for its success 10 Humane Inc. Good ai is humane., April 20, 2023. https://hu.ma.ne/media/designing-for-the-ai-era. . Given that many of Humane's team members came from Apple’s design and engineering teams 11 Wiggers, Kyle. “Humane, a Secretive AI Startup Founded by Ex-Apple Employees, Raises Another $100M.” TechCrunch, March 8, 2023. https://techcrunch.com/2023/03/08/humane-the-secretive-ai-startup-founded-by-ex-apple-employees-raises-another-100m/. , the choice of exactly ten principles is no coincidence. Apple's design is heavily inspired by the work of Dieter Rams at Braun 12 Brill, Rachel. “An Apple Inspiration.” Cooper Hewitt Smithsonian Design Museum, September 24, 2013. https://www.cooperhewitt.org/2013/09/24/an-apple-inspiration/. , and we can draw a direct connection between Rams’ Ten Principles for Good Design and Humane’s Ten Principles for Good AI. One look at the posters that display Rams’ Ten Principles next to Humane’s version for AI suffices to confirm their similarities, and raises the question of whether Humane might have Rams’ poster hanging in its office.

Before we look into the differences between individual principles, it is crucial to notice the extreme difference in context. First, we live in a different century, and as Darius Ou states, “If we always follow the rules set by designers who lived in the 20th century—but we live in the 21st century—then what are we following? Are we blindly following?” 13 Zhuang, Justin. “Lessons in the ‘New Ugly’ School of Design.” Aiga Eye on Design, January 11, 2019. https://eyeondesign.aiga.org/schooled-in-the-new-ugly-lessons-from-darius-ous-autotypography/. Second, and even more significantly, while Rams’ Ten Principles for Good Design focus on the practice of design, Humane's Ten Principles for Good AI assume responsibility for a whole company, its users, non-users affected by the technology and all their activities related to a specific piece of technology. This expands the scope of the Ten Principles from a single discipline to a transdisciplinary level and leaves us with the question: Are Ten Design Principles enough to govern an AI hardware product? A product that Imran Chaudhri and Bethany Bongiorno, the Co-Founders of Humane, describe as “an opportunity for people to take AI with them everywhere and to unlock a new era of personal mobile computing which is seamless, screenless and sensing” 14 Humane. “Humane Reveals the Name of First Device, the Humane AI Pin.” Humane News, 2023. https://hu.ma.ne/media/humane-names-first-device-humane-ai-pin. .

Health data, pictures of our children, and consumer behaviour. There are strong ethical and societal reasons for keeping such personal data private. Humane suggests its commitment to this in its 4th Principle, which states that “Good AI is trustworthy” and “should be designed with the utmost respect for privacy and security, ensuring that users' data is protected and their trust is earned” 15 Humane Inc. Good ai is humane., April 20, 2023. https://hu.ma.ne/media/designing-for-the-ai-era. . In the AI Pin specifically, they honour this principle by not using wake words or any always-on microphones that might infringe on a user's privacy 16 Humane. Aipin Trust, 2023. https://hu.ma.ne/aipin/trust. . But the introduction of devices like the AI Pin and Google Glass excludes one group from this protection. Humane’s principle around trustworthiness is specifically limited to users, leaving behind those who do not use the product but are affected by it. It gives the users autonomy; they can control the technology. However, the autonomy of its users infringes on the autonomy of non-users 17 Zhou, Katherine M. “Ethics 101 for Designers” DESIGN ETHICALLY , 2019. https://www.designethically.com/framework2. . Those who record are in control, while those who are recorded are not.

It would be easy to now draw parallels between the Humane AI Pin and body cams worn by police. But in reality there is one big difference that, while controversial, legitimises body cams despite the privacy concerns they raise. To illustrate this point, we can again draw from previous criticism of Google Glass, because “What distinguishes Glass from CCTV surveillance is lack of due security cause to focus on single individuals and lack of assurance that the recorded data will be managed respecting the legal requirements of intent and proportionality” 18 Kudina, Olya, and Peter-Paul Verbeek. “Ethics from within: Google Glass, the Collingridge Dilemma, and the Mediated Value of Privacy.” Science, Technology, & Human Values 44, no. 2 (2018): 291–314. https://doi.org/10.1177/0162243918793711. . We can make the same argument for the relationship between body cams and the AI Pin.

For body cams, there are regulations, and “[d]espite costs and privacy concerns due to intrusive video and public records disclosure requirements, workable solutions exist for protecting privacy and making it possible to comply with disclosure laws, all while retaining the evidentiary and accountability benefits of body-worn cameras” 19 Richard Lin, “Police Body Worn Cameras and Privacy: Retaining Benefits While Reducing Public Concerns.” 14 Duke Law & Technology Review (2016): 346-365. https://scholarship.law.duke.edu/dltr/vol14/iss1/15 .

The same cannot be said about the AI Pin. Consider a letter from the Privacy Commissioner of Canada, Jennifer Stoddart, and 36 of her counterparts to Larry Page, then CEO of Google, in which they raise concerns about Google Glass. The same concerns can undoubtedly be raised about the AI Pin. It is enough to replace Google Glass with Humane’s AI Pin while reading this quote:

“As you have undoubtedly noticed, Google Glass has been the subject of many articles that have raised concerns about the obvious, and perhaps less obvious, privacy implications of a device that can be worn by an individual and used to film and record audio of other people. Fears of ubiquitous surveillance of individuals by other individuals, whether through such recordings or through other applications currently being developed, have been raised” 20 Office of the Privacy Commissioner of Canada. Data Protection Authorities urge google to address Google Glass concerns, June 18, 2013. https://www.priv.gc.ca/en/opc-news/news-and-announcements/2013/nr-c_130618/#fn2. .

This leads us to the next principle proposed by Humane: Good AI is transparent. With this, “AI should be transparent in its decision-making processes, allowing users to understand and trust the technology” 21 Humane Inc. Good ai is humane., April 20, 2023. https://hu.ma.ne/media/designing-for-the-ai-era. . But with the possibility of ubiquitous surveillance in mind, Humane’s principle again only benefits those who record, not those whose data is recorded and processed. In the case of Google Glass, this led to a new term, the Glasshole: someone who won't remove the device and is expected to record every interaction 22 Urban Dictionary. “Glasshole.” Urban Dictionary, 2013. https://www.urbandictionary.com/define.php?term=Glasshole. . More generally, it can be described as a disruption of our perceived privacy and autonomy in public as well as private settings. Whether the device is turned on or off, the mere possibility of being recorded changes behaviour and disrupts mutual respect and consideration, whether through Google Glass or the AI Pin. One big difference between these devices and smartphones is the signalling. While the motion of taking your phone out of your pocket or raising it to take a picture is a pretty clear signal, the positioning of the AI Pin, similar to that of body cams, is a far more discreet one: a recording is always just the click of a button away.

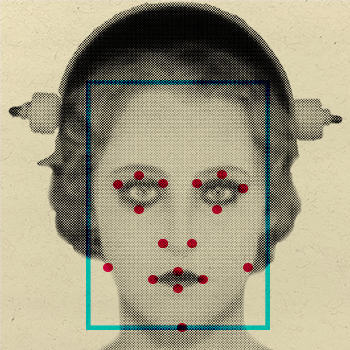

With the AI Pin, there is no obvious gesture. There is just a small light, approximately 13mm by 1.5mm, called the Trust Light. It is described as “A public light for peace of mind” 23 Humane. Aipin Trust, 2023. https://hu.ma.ne/aipin/trust. but, and we can once again cite an article on Google Glass here, “Unless you're in the habit of checking everyone seated within 50 feet of you for red LED glow, you can assume that once Google Glass [or the AI Pin] becomes popular your chances of enjoying some privacy in the outside world have been diminished” 24 Clarke, Chris. “Keep Your Google Glass Away from Me.” PBS SoCal, March 26, 2013. https://www.pbssocal.org/socal-focus/keep-your-google-glass-away-from-me. . So, unless you can easily spot the difference in the picture above and think an LED turning on is obvious enough, we need better signals, or it might just be a matter of time until we see the first AiHoles.

But with transparency in “Good AI” also comes the risk of AI hallucinating, where “AI algorithms produce outputs that are not based on training data, are incorrectly decoded by the transformer or do not follow any identifiable pattern. In other words, it “hallucinates” the response” 25 “What Are Ai Hallucinations?” IBM, 2023. https://www.ibm.com/topics/ai-hallucinations. . We can even see examples of hallucinations and other errors in the AI Pin’s launch video, in which the AI misstates the amount of protein in the almonds it analysed, suggests the wrong spot to see the next solar eclipse and provides the incorrect retail price for the book it scans. While Humane has “fixed” all of these in their launch video by just correcting the audio and text (not very transparent of them), they could have had real-world implications.

These consequences are worrying even in the three examples cited: on the one hand, the financial burden of buying a flight to Australia instead of Mexico or spending too much on a $28 book, and on the other hand, the risk of nutrition-related health issues from overestimating the amount of protein in almonds. On top of that, OpenAI’s GPT, the AI that actually powers the AI Pin, is known for hallucinations with a far greater impact: recently, a lawyer relied on fake cases made up by ChatGPT in a legal brief. He not only believed them to be real and defended them even after their existence was questioned, but was also fined $5,000 26 Merken, Sara. “New York Lawyers Sanctioned for Using Fake CHATGPT Cases in Legal Brief.” Reuters, June 26, 2023. https://www.reuters.com/legal/new-york-lawyers-sanctioned-using-fake-chatgpt-cases-legal-brief-2023-06-22/. .

While research is heavily focused on reducing the number of hallucinations that occur, they remain an important factor when it comes to transparency. OpenAI’s ChatGPT and Google’s Bard warn you directly under the input field that users should double-check the generated information, as the chatbot can make mistakes. Humane’s AI Pin, by contrast, gives no such warning and leaves no room for doubt that everything it tells you is correct.

With this, we get to a more high-level principle Humane has added: “Good AI is ethical” 27 Humane Inc. Good ai is humane., April 20, 2023. https://hu.ma.ne/media/designing-for-the-ai-era. . This statement is as informative as describing a painting as nice. While the statement is correct, it is also incredibly vague, as there are, in Western culture alone, multiple schools of thought around what is considered ethically right or wrong. Nor do right and wrong always coincide with legal and illegal. As Katherine Zhou describes, “After all, slavery was legal at one point…but that does not mean it was ever ethical” 28 Zhou, Katherine M. “Ethics 101 for Designers” DESIGN ETHICALLY , 2019. https://www.designethically.com/framework2. .

If we read further into the description Humane provides, we learn that “AI should be designed with an understanding of the ethical implications of its use, and should always prioritize the well-being of its users” 29 Humane Inc. Good ai is humane., April 20, 2023. https://hu.ma.ne/media/designing-for-the-ai-era. . In the first public unveiling of Humane's AI, Imran Chaudhri, Humane’s co-founder, asked the AI whether he could eat a chocolate bar that he held in front of the camera. The AI informed him that he had an intolerance to one of the ingredients. After Chaudhri responded that he would eat the bar anyway, the AI, on the basis that the user maintains full autonomy, told him to enjoy his chocolate bar 30 Chaudhri, Imran. “The Disappearing Computer - and a World Where You Can Take Ai Everywhere.” Imran Chaudhri: The disappearing computer - and a world where you can take AI everywhere | TED Talk, April 2023. https://www.ted.com/talks/imran_chaudhri_the_disappearing_computer_and_a_world_where_you_can_take_ai_everywhere. .

This brings us to our first dilemma, in which the autonomy of the user is in direct conflict with the AI’s priority for the user's well-being. If this had happened not in the TED Talk studio but in a remote area with a long response time for EMTs, and if the user weren't just intolerant but life-threateningly allergic, would the AI, knowing all this, still prioritise autonomy over well-being, or would it try to convince the user not to eat the chocolate bar?

We can go even further and ask whether this means that a good AI should not even consider the well-being of non-users, or whether it should prioritise the well-being of the user over the well-being of others. Would AI prompt a user to call an ambulance after witnessing a crash? Would AI call the police when it detects it is being used to commit a crime that hurts others? Would AI try to stop the user from feeding their kids expired food or food they are allergic to? What if we add hallucination to the mix? What if your AI Pin all of a sudden made up fake news, was politically biased or mislabelled people as criminals?

Zooming out from the details around some of the Ten Principles for Good AI, I want to make clear that these arguments are not a critique of the technology itself but of the way in which such technology is governed. I truly believe in the benefits we can gain from AI and products like the AI Pin, but I also believe that these products need to be governed based on their possible implications. The implications we’ve seen so far might seem small now but will have vast consequences moving forward. In the same way that we need to stop asking what privacy is and instead ask what it is for 31 Hartzog, Woodrow. “What is Privacy? That's the Wrong Question”, 88 The University of Chicago Law Review 1677 (2021). https://ssrn.com/abstract=3970890 , we need to ask what transparency, honesty, and ethics regarding AI are for. From a lack of privacy to missing signals and ethical uncertainty, Humane Inc.’s Ten Principles for Good AI seem to neglect this question and, with it, everyone who is not the user.

Comparing the Ten Principles side by side easily reveals their equivalencies. Good AI and good design are both supposed to be innovative (1) and timeless (9). They both refer to some level of accessibility, human-centeredness and inclusivity (6, 8, 10) as well as usefulness (2) and efficiency (3) 32 Humane Inc. Good ai is humane., April 20, 2023. https://hu.ma.ne/media/designing-for-the-ai-era. . The latter principle is actually a step back from the original environmental friendliness proposed by Dieter Rams, probably due to the high carbon emissions of running data centres 33 Steven Gonzalez Monserrate, “The Cloud Is Material: On the Environmental Impacts of Computation and Data Storage,” MIT Case Studies in Social and Ethical Responsibilities of Computing,1 January 27, 2022, https://mit-serc.pubpub.org/pub/the-cloud-is-material/release/1. and training large language models 34 Karen Hao, “Training a Single AI Model Can Emit as Much Carbon as Five Cars in Their Lifetimes,” MIT Technology Review, June 6, 2019, https://www.technologyreview.com/2019/06/06/239031/training-a-single-ai-model-can-emit-as-much-carbon-as-five-cars-in-their-lifetimes/. . While there is no real equivalent to Rams’ principle that good design is aesthetic, Humane created new principles around Good AI being ethical and trustworthy and reframed “honesty” as “transparency”.

Another example can illuminate why the Ten Principles alone are not enough to ensure a safe product. The electronic vape JUUL would pass all Ten Principles for Good Design by Dieter Rams. Yet, clearly, the Ten Principles for Good Design were not good enough to stop the vaping epidemic caused by JUUL 35 FDA. “FDA Denies Authorization to Market Juul Products.” U.S. Food and Drug Administration, June 2022. https://www.fda.gov/news-events/press-announcements/fda-denies-authorization-market-juul-products. . Humane’s AI Pin, unsurprisingly, would pass all of the company's self-imposed Principles for Good AI. By following the Google Glass playbook almost step by step, Humane creates an atmosphere in which it prioritises the user instead of considering all the implications of this still controllable sociotechnical experiment that will define the direction AI will take.

Dieter Rams published the Ten Principles for Good Design in 1995 36 Design Wissen. “10 Thesen von Dieter Rams Über Gutes Produktdesign - Designwissen, Positionen Zum Design.” DesignWissen, June 14, 2022. https://designwissen.net/10-thesen-von-dieter-rams-uber-gutes-produktdesign/. : the year Amazon was launched 37 Michigan Journal of Economics. “The History of Amazon and Its Rise to Success.” Michigan Journal of Economics, April 30, 2023. https://sites.lsa.umich.edu/mje/2023/05/01/the-history-of-amazon-and-its-rise-to-success/. , three years before Google was founded 38 Google. “How We Started and Where We Are Today.” Google. Accessed November 19, 2023. https://about.google/intl/ALL_us/our-story/. , and two years before a computer beat a chess grandmaster for the first time 39 Goodrich, Joanna. “How IBM’s Deep Blue Beat World Champion Chess Player Garry Kasparov.” IEEE Spectrum, January 25, 2021. https://spectrum.ieee.org/how-ibms-deep-blue-beat-world-champion-chess-player-garry-kasparov. . As much as I, as an industrial designer, have the deepest respect for Dieter Rams, these rules are no match for the world we live in now. Functional rules to create and govern good AI must build on the lived experience and learnings of the 21st century, like Dunne and Raby’s A/B Manifesto 40 Dunne, Anthony, and Fiona Raby. “A/B Manifesto.” Dunne & Raby, 2009. https://dunneandraby.co.uk/content/projects/476/0. . Apple has distanced itself from its total reliance on design, choosing not to name a new Head of Hardware Design in 2023 41 Peters, Jay. “Apple Won’t Name a New Head of Hardware Design.” The Verge, February 2, 2023. https://www.theverge.com/2023/2/2/23582932/apple-industrial-design-evans-hankey-jony-ive. . However, it seems that the idea that design can save the world still exists within the Apple-trained design team at Humane.

“I believe that this will be the most important and beneficial technology Humanity has yet invented, and I also believe that if we are not careful about it can be quite disastrous, and so we have to navigate it carefully,” OpenAI founder Sam Altman acknowledged 42 Roose, Kevin, Casey Newton, and Sam Altman. “Mayhem at Openai + Our Interview with Sam Altman.” The New York Times, November 20, 2023. https://www.nytimes.com/2023/11/20/podcasts/mayhem-at-openai-our-interview-with-sam-altman.html. .

Films and shows like the hugely successful “Black Mirror” imagine promising technologies evolving into twisted high-tech dystopias and demonstrate the broad range of disastrous consequences that could arise from irresponsible sociotechnical experiments 43 IMDb. “Black Mirror.” IMDb, December 4, 2011. https://www.imdb.com/title/tt2085059/. . It is just a matter of time before we have our very own version of a Black Mirror episode if those who invent, incubate, and invest in AI don't challenge the rules of the past and replace them with rules fit to govern the present.

Sheng-Hung Lee, who worked on the first Chinese translation of Dieter Rams’ complete works, reflected that “We have already built too many close-to-perfect products as a singular unit in system.” and asked, “How do we connect dots (product as single unit) by creating a platform-level system that can keep evolving…” 44 Lee, Sheng-Hung. “Industrial Design Paradigm Shift: Reflection on Translating Dieter Rams Portfolios.” DesignWanted, November 2, 2023. https://designwanted.com/dieter-rams-industrial-design-paradigm-shift/. . The key here is evolution. Rather than thinking about how to replace the existing Ten Principles with a new set of static rules that will inevitably become obsolete, we should focus on building a dynamic system of governance that allows us to navigate a world and a technological landscape that are changing a lot faster than Dieter Rams’ world before 1995.